Ivory Research Interface Data Analysis Services.

Projects.

R Tutorial: Data Cleaning and Word Clouds.

Learning Objectives.

From this case study you should learn how to:

- Prepare a series of character string responses (words) for qualitative analysis using the packages in

tidyverse,stopwords, andtm. - Create a vector of a custom

stopwordslist. - Set all characters in a data set to lower case letters using

tolower. - Remove punctuation and extra whitespace from character strings using

gsubandtrimws. - Omit commonly used words (

stopwords) from strings of text. - Split strings of characters into separate rows using

strsplit. - Replace patterns in series of strings using

gsub. - Generate a Word Cloud of most frequent words or concepts in character string responses using the packages

wordcloud2, andRColorBrewer.

Introduction.

More often, qualitative data is varied in its syntax as different respondents communicate with varying levels of committment and abilities. There are respondents that are very verbose, taking every character of the 355 character input field to pour every emotion they have into a response. Other respondents are not well versed in writing, or have the penchant to answer a question in full detail. These respondents will input the first thing that comes to mind and proceed to the next task. It is because of this varied gradient in attitudes and aptitudes of communication that data cleaing for qualitative analyses can become a very involved undertaking.

A survey of 133 people in a college department with regard to research training needs was conducted. These respondents were taking courses that involved business research activities, such as reading market analyses, surveying target customers, and investigating incumbent firms in an industry. The research activities of respondents was further encumbered by restrictions and limitations that the pandemic brought on.

In the survey that was distributed, one of the questions was, "How could the Department help you with your research endeavours?" As expected, the responses ran the gambit from verbose to terse. With this potentially rich source of information, a very simple sentiment analysis based on a frequency could produce a Word Cloud. Data cleaning to find common terms allowed for the identification of common views held among respondents. After cleaning the data, a frequency table of terms used in the responses could be generated. A Word Cloud as a result of the frequency table offers insight as to prevalent or frequently stated sentiments among responses.

A download of the data set that we will work from in this module is available here. Just save this CSV file to a folder that you can reference as we proceed with the module.

Using the Packages tidyverse, stopwords and tm.

As a collection of packages, tidyverse allows for data manipulation and visualizations. Packages in tidyverse are dplyr, ggplot2, tidyr, readr, purrr, tibble, stringr, and forcats. Information on what each of these packages do is available at the Tidyverse website. For the intents and purposes of this tutorial we will exercise a few commands from the tidyverse collection.

To install the tidyverse collection of packages for this module just use the command install.packages(). To use the collection in your current R session, use the command library().

install.packages(tidyverse, dependencies=TRUE)library(tidyverse)

The package stopwords contains pronouns, prepositions, conjugations, verbs, adjectives and the like that do not offer information useful in determining the category of text being classified. These words might not carry significant meaning or occur frequently in open-ended responses.We could think of stopwords as a collection of words in common use. Examples of stopwords are "I", "me", "I'm", "because", "above", "again", "a", or "to". As of April 2021, there are 174 stopwords in English.

To install the stopwords package for this module just use the command install.packages(), again. To use the package in your current R session, use the command library().

install.packages(stopwords)library(stopwords)

The package tm offers text mining processes. These processes range from the generation of term matricies, word conversion, and omission of offensive language. A download for the package manual is available here.

As with the other packages, to install the tm package for this module just use the command install.packages(). Again, to use the package in your current R session, use the command library().

install.packages(tm, dependencies=TRUE)library(tm)

Let's load the data set with the command read.csv(). The data we will use is a CSV of the open-ended responses from a Research Training Needs survey. We will assign a vector name rna.fac.st for the base data set. From there, another vector called requests will just get the open-ended responses. For convenience the data a download of the data set is here.

rna.fac.st <- read.csv("Qual_Responses.csv", header=TRUE)

requests <- rna.fac.st$How.could.the.Department.help.you.with.your.research.endeavours.

When we take a look at the contents of the rna.fac.st vector, we should find 133 rows of three column responses. The first row is X. The second row is Timestamp. The third row asks the question, "How could the Department help you with your research endeavors?" Here, I will just show the head (the first six rows) and the tail (the last six rows) of responses.

head(rna.fac.st)

X Timestamp

1 1 2021/03/25 12:21:35 PM GMT+8

2 2 2021/03/25 12:24:07 PM GMT+8

3 3 2021/03/25 12:24:43 PM GMT+8

4 4 2021/03/25 12:25:26 PM GMT+8

5 5 2021/03/25 12:25:49 PM GMT+8

6 6 2021/03/25 12:26:24 PM GMT+8

How.could.the.Department.help.you.with.your.research.endeavors.

1 Easier Access and the features should be student-friendly

2 by having consultation to help us widen and broaden our ida with other peoples opinion, advice and suggestions

3 The department can help me through guidance and help whenever I have a difficult milestone to surpass in my research

4 access of libraries

5 Maybe give more resources to students which can really be of use to their proposed businesses.

6 Access to more business and technological advancements related research/thesis topics in the online library. More lessons about programs that can help in analyzing survey results.

tail(rna.fac.st)

X Timestamp

128 128 2021/03/26 8:16:03 PM GMT+8

129 129 2021/03/26 8:42:55 PM GMT+8

130 130 2021/03/26 9:17:15 PM GMT+8

131 131 2021/03/26 9:51:43 PM GMT+8

132 132 2021/03/27 2:51:49 AM GMT+8

133 133 2021/03/29 12:44:54 PM GMT+8

How.could.the.Department.help.you.with.your.research.endeavors.

128 provide more data gatherer platforms or applications

129 Collaboration with experts

130 Access to experts through referrals if the school has any connections to such

131 The Entrepreneurship Department can help with the widening of networks and exposure to business trade fairs, seminars, sharing of experiences, etc.

132 The department could help me in building connections to meet different kind of people in the field and learn from them based on their experience and expertise. Hence, it would be great if the industry students would like to pursue will be focused.

133 It help me to conduct a research in a systematical way and provides reliable source and datas

The vector requests isolates the third column, which asks respondents for an open-ended response to the question, "How could the Department help you with your research endevors?" Here, I show the first six rows of the vector. Keep in mind that there are 133 total responses in this data set.

head(requests)

[1] "Easier Access and the features should be student-friendly"

[2] "by having consultation to help us widen and broaden our ida with other peoples opinion, advice and suggestions "

[3] "The department can help me through guidance and help whenever I have a difficult milestone to surpass in my research"

[4] "access of libraries"

[5] "Maybe give more resources to students which can really be of use to their proposed businesses"

[6] "Access to more business and technological advancements related research/thesis topics in the online library. More lessons about programs that can help in analyzing survey results. "

Create a vector of a custom stopwords list.

A vector of stopwords must be created, which will serve as the list of common words that do not help in classifying responses. The 174 stopwords already present in the English list (stopwords("en")) from the package stopwords is a general collection.

r$> stop_words <- stopwords("en")

r$> stop_words

[1] "i" "me" "my" "myself" "we" "our" "ours" "ourselves" "you" "your" "yours"

[12] "yourself" "yourselves" "he" "him" "his" "himself" "she" "her" "hers" "herself" "it"

[23] "its" "itself" "they" "them" "their" "theirs" "themselves" "what" "which" "who" "whom"

[34] "this" "that" "these" "those" "am" "is" "are" "was" "were" "be" "been"

[45] "being" "have" "has" "had" "having" "do" "does" "did" "doing" "would" "should"

[56] "could" "ought" "i'm" "you're" "he's" "she's" "it's" "we're" "they're" "i've" "you've"

[67] "we've" "they've" "i'd" "you'd" "he'd" "she'd" "we'd" "they'd" "i'll" "you'll" "he'll"

[78] "she'll" "we'll" "they'll" "isn't" "aren't" "wasn't" "weren't" "hasn't" "haven't" "hadn't" "doesn't"

[89] "don't" "didn't" "won't" "wouldn't" "shan't" "shouldn't" "can't" "cannot" "couldn't" "mustn't" "let's"

[100] "that's" "who's" "what's" "here's" "there's" "when's" "where's" "why's" "how's" "a" "an"

[111] "the" "and" "but" "if" "or" "because" "as" "until" "while" "of" "at"

[122] "by" "for" "with" "about" "against" "between" "into" "through" "during" "before" "after"

[133] "above" "below" "to" "from" "up" "down" "in" "out" "on" "off" "over"

[144] "under" "again" "further" "then" "once" "here" "there" "when" "where" "why" "how"

[155] "all" "any" "both" "each" "few" "more" "most" "other" "some" "such" "no"

[166] "nor" "not" "only" "own" "same" "so" "than" "too" "very"

This list can be added to by combining it with other character strings. The choice of words to add will depend on the context and wording of the question responses are for. In this case, the question was asked to members of a collge entrepreneurship department. Hence, words as "students", "department", "help" and the like will not provide this simple sentiment analysis much insight as these would be extrememly repeated words in the the aggregate of responses. Here, we add to the stopwords list. Additions to this vector use the operation c(), which adds more words to the stopwords("en") ("en" for English) list. To the list are added words as "us", "maybe", "probably", "business", "entrepreneurship" and the like. With the addition of words, the list has expanded to 214 stopwords.

r$> stop_words <- c(stop_words, "us", "maybe", "can", "helps", "help", "will", "come", "new", "students", "student", "different", "become", "becoming", "also", "put", "able", "probably", "needs", "joining", "looked", "look", "give", "business", "entrepreneurship", "department", "right", "guess", "etc", "it", "way", "easy", "easier", "use", "really", "basically", "whenever", "get", "like", "researches", "research")

r$> stop_words

[1] "i" "me" "my" "myself" "we" "our" "ours"

[8] "ourselves" "you" "your" "yours" "yourself" "yourselves" "he"

[15] "him" "his" "himself" "she" "her" "hers" "herself"

[22] "it" "its" "itself" "they" "them" "their" "theirs"

[29] "themselves" "what" "which" "who" "whom" "this" "that"

[36] "these" "those" "am" "is" "are" "was" "were"

[43] "be" "been" "being" "have" "has" "had" "having"

[50] "do" "does" "did" "doing" "would" "should" "could"

[57] "ought" "i'm" "you're" "he's" "she's" "it's" "we're"

[64] "they're" "i've" "you've" "we've" "they've" "i'd" "you'd"

[71] "he'd" "she'd" "we'd" "they'd" "i'll" "you'll" "he'll"

[78] "she'll" "we'll" "they'll" "isn't" "aren't" "wasn't" "weren't"

[85] "hasn't" "haven't" "hadn't" "doesn't" "don't" "didn't" "won't"

[92] "wouldn't" "shan't" "shouldn't" "can't" "cannot" "couldn't" "mustn't"

[99] "let's" "that's" "who's" "what's" "here's" "there's" "when's"

[106] "where's" "why's" "how's" "a" "an" "the" "and"

[113] "but" "if" "or" "because" "as" "until" "while"

[120] "of" "at" "by" "for" "with" "about" "against"

[127] "between" "into" "through" "during" "before" "after" "above"

[134] "below" "to" "from" "up" "down" "in" "out"

[141] "on" "off" "over" "under" "again" "further" "then"

[148] "once" "here" "there" "when" "where" "why" "how"

[155] "all" "any" "both" "each" "few" "more" "most"

[162] "other" "some" "such" "no" "nor" "not" "only"

[169] "own" "same" "so" "than" "too" "very" "us"

[176] "maybe" "can" "helps" "help" "will" "come" "new"

[183] "students" "student" "different" "become" "becoming" "also" "put"

[190] "able" "probably" "needs" "joining" "looked" "look" "give"

[197] "business" "entrepreneurship" "department" "right" "guess" "etc" "it"

[204] "way" "easy" "easier" "use" "really" "basically" "whenever"

[211] "get" "like" "researches" "research"

Set all characters in a data set to lower case letters using tolower.

Looking again at the requests vector, you will notice the presence of upper case and lower case letters. To facilitate a more efficient processing of this character data, we should bring consistency to the characters in responses. The command tolower() takes a character vector and forces all characters to lower case. Here, we apply the command to the vector requests. As this is the start of our data cleaning process we designate a vector name indicative of its step in the cleaning process. In this case, the vector is named req.01. Notice, that in the print of req.01 all characters are coerced to lower case.

Remove punctuation and extra whitespace from character strings using gsub and trimws.

Looking again at the requests vector, you will notice the presence of upper case and lower case letters. To facilitate a more efficient processing of this character data, we should bring consistency to the characters in responses. Here, we show the first six rows from the processed vector.

r$> req.01 <- tolower(requests)

r$> head(req.01)

[1] "easier access and the features should be student-friendly"

[2] "by having consultation to help us widen and broaden our ida with other peoples opinion, advice and suggestions "

[3] "the department can help me through guidance and help whenever i have a difficult milestone to surpass in my research"

[4] "access of libraries"

[5] "maybe give more resources to students which can really be of use to their proposed businesses."

[6] "access to more business and technological advancements related research/thesis topics in the online library. more lessons about programs that can help in analyzing survey results. "

Compare this to the previous iteration of the same vector.

head(requests)

[1] "Easier Access and the features should be student-friendly"

[2] "by having consultation to help us widen and broaden our ida with other peoples opinion, advice and suggestions "

[3] "The department can help me through guidance and help whenever I have a difficult milestone to surpass in my research"

[4] "access of libraries"

[5] "Maybe give more resources to students which can really be of use to their proposed businesses"

[6] "Access to more business and technological advancements related research/thesis topics in the online library. More lessons about programs that can help in analyzing survey results. "

Remove punctuation and extra whitespace from character strings using gsub and trimws.

Now, let's remove all punctuation in the responses. To do this, we use the command gsub() to substitute characters and strings with other values. This is the second step in the cleaning process. As such, a new vector called req.02 is created to reflect this new step. As this second step draws from information in the first, we reference the vector req.01 (the output of the first step). Again, we show just the first six rows of output. Notice that the period and hyphen were removed.

r$> req.02 <- gsub("[[:punct:] ]+", " ", req.01)

r$> head(req.02)

[1] "easier access and the features should be student friendly"

[2] "by having consultation to help us widen and broaden our ida with other peoples opinion advice and suggestions "

[3] "the department can help me through guidance and help whenever i have a difficult milestone to surpass in my research"

[4] "access of libraries"

[5] "maybe give more resources to students which can really be of use to their proposed businesses "

[6] "access to more business and technological advancements related research thesis topics in the online library more lessons about programs that can help in analyzing survey results "

There are responses with extra whitespace at the ends of a sentence. Responses in rows [2], [5], and [6] are examples of this condition. The command trimws removes extra spaces that occur at the start and end of a character string.In this third step of the cleaning process, it is necesary to remove extra whitespace. Here, we create a vector req.03 that trims the extra whitespace from req.02 (the output of the second step). Notice, that leading and trailing whitespaces are removed. Again, the first six lines of are shown for brevity of presentation.

r$> req.03 <- trimws(req.02)

r$> head(req.03)

[1] "easier access and the features should be student friendly"

[2] "by having consultation to help us widen and broaden our ida with other peoples opinion advice and suggestions"

[3] "the department can help me through guidance and help whenever i have a difficult milestone to surpass in my research"

[4] "access of libraries"

[5] "maybe give more resources to students which can really be of use to their proposed businesses"

[6] "access to more business and technological advancements related research thesis topics in the online library more lessons about programs that can help in analyzing survey results"

Omit commonly used words (stopwords) from strings of text.

In the fourth step of our data cleaning exercise, stopwords must be removed. The command removeWords is applied to req.03. From the vector req.03 stopwords contained in the vector stop_words will be removed. As this is the fourth step a vector named req.04 is created to hold the output from removeWords(). Notice that after stopwords are removed there exists extra spaces between the words that remain.

r$> req.04 <- removeWords(req.03, stop_words)

r$> head(req.04)

[1] " access features friendly"

[2] " consultation widen broaden ida peoples opinion advice suggestions"

[3] " guidance difficult milestone surpass "

[4] "access libraries"

[5] " resources proposed businesses"

[6] "access technological advancements related thesis topics online library lessons programs analyzing survey results"

Replace patterns in series of strings using gsub

To remove the extra spaces between words we can use gsub() again to replace these multiple spaces with a single space. By creating a regular expression (regex), prefixed by a \, we can identify spaces, denoted by \s. An occurence indicator +, also known as a repetition operator, indicates the occurence of the previous expression (\s) more than once. The regex \\s+ tells the gsub command to look for occurrences of one or more spaces. The second option in the gsub command, indicates the string that replaces the pattern stated in the first option (\\s+). This replacement process is applied to vector req.04. As this is the fifth step in this data cleaning process, we create a vector req.05. A sixth step removes any resulting leading and trailing whitespaces in vector req.06.

r$> req.05 <- gsub("\\s+", " ", req.04)

r$> head(req.05)

[1] " access features friendly"

[2] " consultation widen broaden ida peoples opinion advice suggestions"

[3] " guidance difficult milestone surpass "

[4] "access libraries"

[5] " resources proposed businesses"

[6] "access technological advancements related thesis topics online library lessons programs analyzing survey results"

r$> req.06 <- trimws(req.05)

r$> head(req.06)

[1] "access features friendly"

[2] "consultation widen broaden ida peoples opinion advice suggestions"

[3] "guidance difficult milestone surpass"

[4] "access libraries"

[5] "resources proposed businesses"

[6] "access technological advancements related thesis topics online library lessons programs analyzing survey results"

There are times where some terms are stated as verbs or even adjectives but allow for classification in a particular category or concept. In instances like these, it will be necessary to replace variations of certain words with their root concept or term. One way to do this is with the command gsub(), where a pattern is replaced by a designation. For this use of gsub we replaced the string "providing" with the designation "provide". This pattern replacement is applied to vector req.06. As this is the seventh step of the process a vector req.07 contains the output from gsub. Here, a comparison between steps 6 req.06) and seven (req.07) show the effect of the gsub pattern replacement of "providing" on response [125]. An eight step (req.08) uses gsub again to replace all spaces denoted by " " with a comma, ",". For brevity, the first six rows of req.08 are shown.

r$> req.07 <- gsub("providing", "provide", req.06)

r$> req.07[125] # This is step 7.

[1] "provide proper constructive criticism"

r$> req.06[125] # This was step 6.

[1] "providing proper constructive criticism"

r$> req.08 <- gsub(" ", ",", req.07)

r$> head(req.08)

[1] "access,features,friendly"

[2] "consultation,widen,broaden,ida,peoples,opinion,advice,suggestions"

[3] "guidance,difficult,milestone,surpass"

[4] "access,libraries"

[5] "resources,proposed,businesses"

[6] "access,technological,advancements,related,thesis,topics,online,library,lessons,programs,analyzing,survey,results"

Split strings of characters into separate rows using strsplit.

As all words in each response were separated by a comma in the eight step, use of strsplit will facilitate the creation of a frequency table. Splitting strings into different rows allows for a frequency count of terms. The command strsplit() will split a character string into separate strings as demarcated by some symbol or space. A new vector x is created to hold the output from the strsplit command on req.08. Here, the first six rows of req.08 as affected by strsplit are shown. Notice that each word is now an individual string as denoted by the surrounding quotations (""). This resultant vector is coerced to a data frame by data.frame where each word is held in a separate row within a column named Term. The function unlist simplifies a list (in this case vector x) that contains the individual elements of a vector.

r$> x <- strsplit(as.character(req.08), ',')

r$> head(x)

[[1]]

[1] "access" "features" "friendly"

[[2]]

[1] "consultation" "widen" "broaden" "ida" "peoples" "opinion" "advice" "suggestions"

[[3]]

[1] "guidance" "difficult" "milestone" "surpass"

[[4]]

[1] "access" "libraries"

[[5]]

[1] "resources" "proposed" "businesses"

[[6]]

[1] "access" "technological" "advancements" "related" "thesis" "topics" "online" "library" "lessons"

[10] "programs" "analyzing" "survey" "results"

r$> x.df <- data.frame(Term = unlist(x))

r$> head(x.df) # Notice that each word is in its own row.

Term

1 access

2 features

3 friendly

4 consultation

5 widen

6 broaden

To create the frequency table, the command table generates a count of all rows with the same spellings. We will send this frequency table to a vector named x.terms. The x.terms vector is descendingly ordred according to the second column, Freq. This ordered table is placed in a vector named x.terms.1.

r$> x.terms <- data.frame(table(x.df))

r$> head(x.terms)

x.df Freq

1 1 3

2 19 1

3 accept 2

4 access 21

5 accessed 1

6 accessibility 1

r$> x.terms.1 <- x.terms[order(-x.terms[,2]),]

r$> head(x.terms.1)

x.df Freq

432 provide 25

4 access 21

280 information 20

306 knowledge 14

378 online 13

279 industry 12

Interpretation

Just from this frequency table we begin to see that respondents are asking for the department to provide resources as access and information. Futhermore, it appears that respondents want materials online and address matters of industry.

Generate a Word Cloud of most frequent words or concepts in character string responses using the packages wordcloud2, and RColorBrewer.

To generate a Word Cloud we will use the package wordcloud2 coupled with color palettes from the package RColorBrewer. The package wordCloud2 allows for the creation of Word Clouds in HTML. Color palettes in RColorBrewer facilitate color choices for graphics.

Installing wordcloud2 and RColorBrewer is facilitated by the command install.packages(). Activating these packages in your current R session is done using the command library().

install.packages("wordcloud2")

library(wordcloud2)

install.packages("RColorBrewer")

library(RColorBrewer)

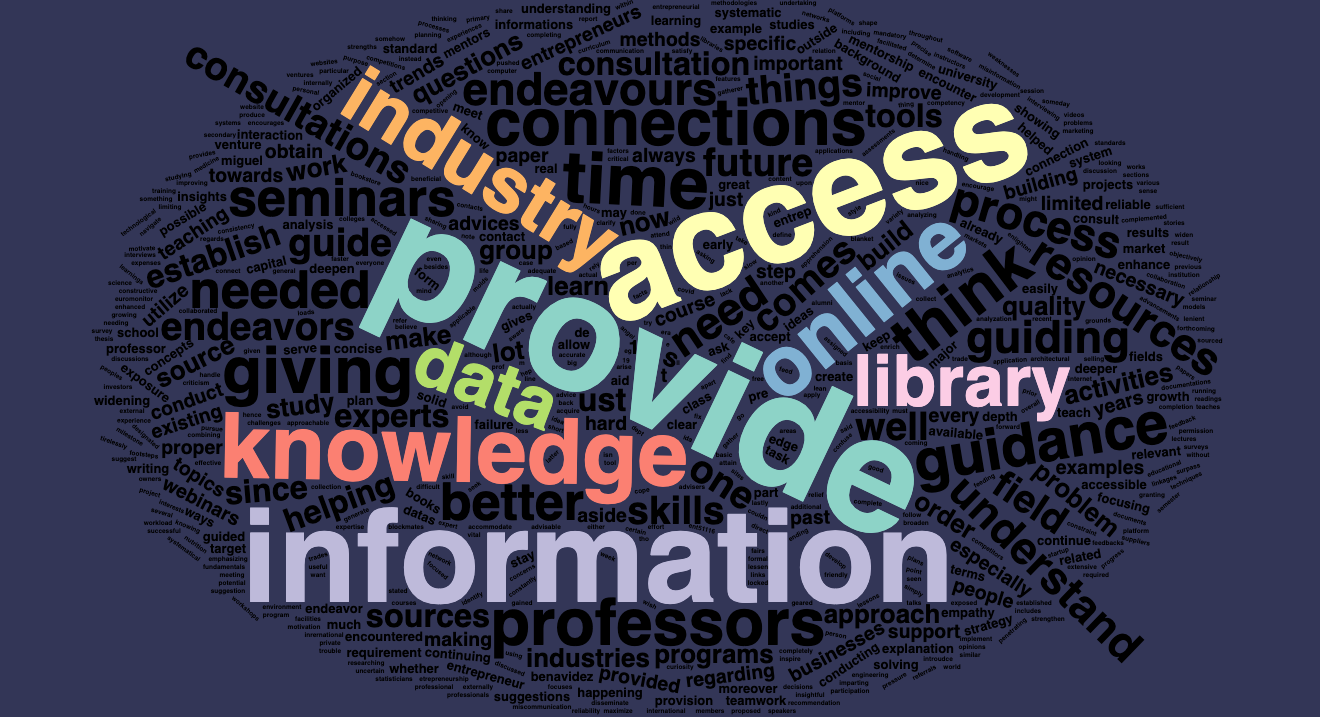

Let's create a vector to hold the Word Cloud. This vector is named student.words.2. This vector will hold output from the command function wordcloud2. The Word Cloud will be based on data from the vector x.terms.1. Words will be in Helvetica font (fontFamily). Font size for the words will be 0.9. The background (backgroundColor) of the HTML will be #333657. Colors will be set by the RColorBrewer palette Set3, as called by brewer.pal. From the Set3 palette, eight (8) colors will be used.

student.words.2 <- wordcloud2(data=x.terms.1, fontFamily='Helvetica', backgroundColor="#333657", size=0.9, color=brewer.pal(8, "Set3"))

student.words.2

The resulting output should look something like the following.

Interpretation

In light with other investigations of the department, a series of key points can be drawn from this Word Cloud.

- Students are expecting the Department to provide resources as seminars, opinions and insights, as well as analysis tools.

- Students are asking for more free access to information and data.

- Students are asking for information access online, from the library.

- Students are asking for the department to expose them to industry.

- Students are asking for connections to industry partners.

- Students are asking for knowledge in research skills.

Conclusion.

In this module we discussed how to clean data from open-ended survey responses. From the data set, we set all characters to lower case, removed stopwords, removed extra whitespaces from strings, as well as generated a frequency table.

As the year progresses I will add more modules. If your organization would like a live tutorial or a series of analyses done on any data sets, please contact me by email.